How human is too human?

In the movie Blade Runner 2049, K has an AI hologram at home that acts as his digital companion and wife. Although she is an AI, she displays human-like errors, such as fussing over and struggling with a new recipe for dinner. Later, the company that produces these AIs asks K whether he is satisfied with the product. He replies, somewhat uneasily, “She’s very realistic” (Fancher 2017).

In anticipation of an era of advanced human-robot interaction, robotics professor Masahiro Mori proposed a relationship between human affinity for a robot and the robot’s human-likeness. The uncanny valley, pictured below, illustrates this relationship.

(Mori 2012)

In this diagram, Mori suggested that human affinity for a robot increases as the robot looks more human, until its appearance falls just short of that of a real, healthy human. Within this range of appearance lies the uncanny valley, where affinity for the robot dips. Some researchers theorize that the valley arises from human discomfort with human-like figures that resemble dead people. In the Blade Runner example above, K’s digital companion lies to the far right of the valley, since she very closely resembles a real, healthy human.

Because Mori’s propositions focused on a robot’s appearance, my research will consider the other element of human-likeness: behaviour. When we make a robot look more human, we should also consider what makes it behave in a human-like way, particularly in its displays of intelligence and rationality. As the trend of designing human-like robots grows, we need to weigh its consequences for human-robot interaction carefully. Research can guide us toward designing androids that build strong relationships with the people who use them. Many of these androids may be used in homes, schools, and hospitals, interacting with vulnerable populations in vulnerable spaces. It is therefore important to create interactions that foster trust and care and that ultimately benefit humankind. I will propose an experiment to identify the points of comfort in interacting with androids that display varying degrees of human-like behaviour, helping us construct a behavioural version of the uncanny valley. The words robot and android will be used interchangeably, android being a robot with a human appearance.

Anthropomorphizing a robot’s physical design and behaviour is currently a popular strategy for easing human-robot interaction as robots enter our more personal spaces (Duffy 2003). Robots are being developed for several roles, including social companions, caretakers, and labourers. For interactions between robots and humans to be optimal, robot design should facilitate trust, empathy, and, ultimately, companionship.

Several studies have highlighted the effect that anthropomorphizing robots has on human-robot interaction. In a study measuring human empathy toward anthropomorphic robots, participants were shown video clips “featuring five protagonists of varying degrees of human-likeness”. In each video, the featured protagonist was involved in emotionally provocative situations, and participants’ empathy toward the protagonists was measured in a post-video survey. The researchers concluded that people showed more empathy toward human-like robots and less empathy toward mechanical-looking robots (Riek 2009).

There is also neuroimaging support for these findings. Krach et al. observed brain activity as participants interacted with robots of varying anthropomorphic features while playing a game. As the game partners became more human-like, cortical regions of the classical Theory of Mind network were increasingly engaged, specifically the right posterior superior temporal sulcus (pSTS) at the temporo-parietal junction (TPJ) and the medial prefrontal cortex (mPFC) (Krach 2008). According to the Encyclopedia on Early Childhood Development, “Theory of mind refers to our understanding of people as mental beings, each with his or her own mental states – such as thoughts, wants, motives and feelings. We use theory of mind to explain our own behaviour to others, by telling them what we think and want, and we interpret other people’s talk and behaviour by considering their thoughts and wants” (Astington 2010). This suggests that participants attributed human-like mental states to the anthropomorphic robots. From the post-experiment questionnaire, the researchers concluded that participants found the human-like robots more sympathetic and more pleasant to interact with (Krach 2008).

Encouraged by the research above and many similar studies, top technology companies are competing to build anthropomorphic designs into their robots. Hanson Robotics, a Hong Kong-based engineering company, recently gained wide attention after showcasing its advanced human-like robot, Sophia. The company’s goal is to “humanize AI” and to create emotional connections between androids and humans. Its website states the mission: “Hanson AI develops cognitive architecture and AI-based tools that enable our robots to simulate human personalities, have meaningful interactions with people and evolve from those interactions” (Sophia). Sophia is intended to serve as a tool for exploring human-robot interaction, particularly for service and entertainment purposes. Combining symbolic AI, neural networks, expert systems, machine perception, conversational natural language processing, adaptive motor control, cognitive architecture, and other technologies, Sophia is designed to carry out fully automated interactions with humans, with the aim of becoming the ultimate human companion (Sophia).

Putting aside this recent emphasis on robotic appearance, new studies are beginning to address the behavioural side of the movement to anthropomorphize robots. These studies explore the effects of robot errors in social interactions, such as speech errors, task errors, and social errors. Mirnig et al. conducted a human-robot interaction user study in which they deliberately programmed faulty behaviour into a robot. The study was motivated by the Pratfall Effect, which holds that a competent person becomes more attractive after making a mistake (Mirnig 2017). Participants were given a LEGO building task in which they followed a robot’s instructions. The faulty robot gave flawed instructions, and participants’ reactions were compared with reactions to a robot that performed flawlessly. Mirnig et al. found that “the faulty robot was rated as more likeable, but neither more anthropomorphic nor less intelligent…participants liked the faulty robot significantly better than the robot that interacted flawlessly” (Mirnig 2017). Notably, the faulty behaviour was preferred even though participants did not perceive the faulty robot as more human-like.

My research proposal is largely inspired by the above study. As with any emerging technology, we will not know its true long-term effects until we conduct longitudinal research. One limitation of studies of this nature is the difficulty of obtaining robots that are sufficiently human-like in appearance. Such studies also require advanced AI and machine learning technology, which is expensive and time-consuming to develop. Ideally, we could experiment with androids that build meaningful relationships with humans by learning and mirroring emotions and behaviour. Mirnig et al. referred to a paper stating, “Since robots in HRI are social actors, they elicit mental models and expectations known from human–human interaction (HHI)” (Lohse 2011). We need to treat these androids as if we are “training social actors” and use technology that mimics humans as closely as possible, in both appearance and behaviour. We could then observe and collect data from human-android interactions over longer periods of time, drawing more accurate and more generalizable conclusions than previous studies. This proposal is becoming increasingly feasible thanks to the work of companies like Hanson Robotics, as Sophia is designed to help conduct research of this nature. With a few more years of funding and development of technology like Sophia’s, the following experiment could be implemented.

This experiment will be an exploratory study investigating human affinity for robots with varying degrees of faultiness. Each participant would be paired with a social/companion android that can perform a variety of basic household tasks in addition to providing companionship. Data will be collected over five years to allow enough time for a significant human-robot relationship to develop. All androids should be as anthropomorphic as possible, using Hanson’s Sophia robot as a baseline, as she is currently the most functional human-looking robot. The androids’ behaviour, however, will differ across the following three experimental groups:

Type I: Perfectly rational robot, with no programmed errors

Type II: Robot programmed with the same error rate as the average human

Type III: Robot programmed with a higher error rate than the average human

The goal is for the robots to resemble human appearance and mimic human behaviour as closely as possible, so that we can determine the behavioural thresholds at which human-robot interaction is optimized. Accordingly, the errors the robots make will closely resemble everyday human errors, such as the following:

Ex 1. Blade Runner example: Robot tries a new recipe for dinner and fails

Ex 2. Social cue example: Robot asks the human to repeat what they said, as if it had not heard properly

Ex 3. Task example: Robot brings the participant a glass of milk when asked for a glass of water

These errors should pose minimal risk of harm to participants and cause, at most, mild annoyance. For example, we would not want a participant to miss an important meeting at work because the android gave them the wrong time.

The error rates should be determined through preliminary studies, such as natural experiments that observe social and task errors among average, healthy humans.
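The three Types can be made concrete as a per-interaction error probability. The sketch below is a minimal illustration under assumptions that are not part of the proposal: the preliminary studies are assumed to yield a count of observed errors per number of opportunities (tasks or social exchanges), a Type III robot is assumed to err at a fixed multiple of the human rate (the `type3_multiplier` of 2.0 is a hypothetical placeholder), and errors are drawn only from a catalogue of harmless error kinds like Ex 1 to 3 (the labels in `HARMLESS_ERRORS` are hypothetical).

```python
import random

# Hypothetical labels for low-stakes errors, mirroring Ex 1 to 3 above.
HARMLESS_ERRORS = ["failed_recipe", "asks_to_repeat", "wrong_drink"]


def estimate_error_rate(errors_observed: int, opportunities: int) -> float:
    """Baseline human error rate: observed errors per opportunity."""
    if opportunities <= 0:
        raise ValueError("opportunities must be positive")
    return errors_observed / opportunities


def error_rate_for_type(robot_type: str, human_rate: float,
                        type3_multiplier: float = 2.0) -> float:
    """Per-interaction error probability for each experimental group.

    type3_multiplier is an assumed placeholder; the proposal only says
    that Type III errs more often than the average human.
    """
    rates = {
        "I": 0.0,                                        # never errs
        "II": human_rate,                                # matches humans
        "III": min(1.0, human_rate * type3_multiplier),  # errs more often
    }
    return rates[robot_type]


def maybe_make_error(robot_type: str, human_rate: float, rng: random.Random):
    """Return a harmless error label for this interaction, or None."""
    if rng.random() < error_rate_for_type(robot_type, human_rate):
        return rng.choice(HARMLESS_ERRORS)
    return None


# Illustrative counts only, not real data: 12 errors in 400 exchanges.
human_rate = estimate_error_rate(12, 400)
rng = random.Random(42)
log = [maybe_make_error("II", human_rate, rng) for _ in range(1000)]
print(sum(e is not None for e in log), "errors in 1000 interactions")
```

Drawing each error independently is the simplest design choice; an actual study might instead schedule errors so they do not cluster within a single day.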

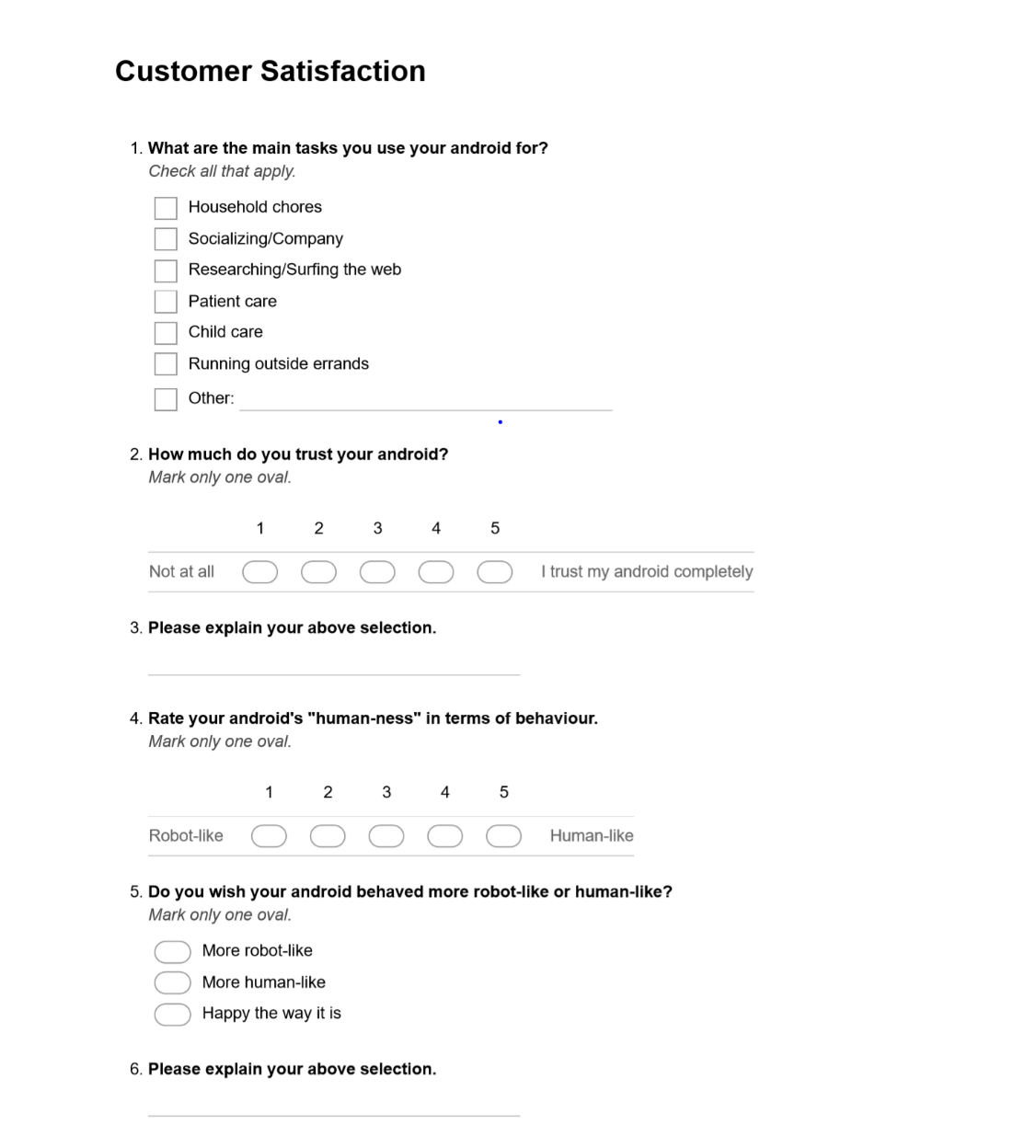

Surveys will be conducted twice a year to measure perceptions of the assigned robot, covering a range of variables such as trust, perceived anthropomorphism, and how much participants like the android, as in the Mirnig et al. study. Example questions are available in the following form:

https://forms.gle/rSdeaPvKrpYAkUic9

I expect to see relationships between variables in the survey, for example between tasks and degree of faultiness: the main tasks an android is used for could be related to how faulty it is. Once the data are compiled, these relationships should be aggregated so that we can identify correlations between the Types and the perceptions measured in the survey. I hypothesize that humans will be most comfortable with Type II androids, as they resemble humans most closely. An alternative outcome is that participants develop positive relationships with Type III androids: because these androids make more mistakes, participants may bond with them through more shared learning experiences, similar to a parent-child relationship.
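The aggregation step could start by grouping survey responses by Type and averaging each variable. This is a minimal sketch under assumptions not stated in the proposal: each response is a record holding the participant’s Type and numeric ratings, the variable names `trust`, `likeability`, and `anthropomorphism` are hypothetical, and the placeholder values are invented purely for demonstration, not results. Comparing group means is only a first step; a formal test (for example, an analysis of variance) would be needed before drawing conclusions.

```python
from collections import defaultdict
from statistics import mean


def mean_scores_by_type(responses):
    """Average every numeric survey variable within each experimental Type."""
    grouped = defaultdict(lambda: defaultdict(list))
    for response in responses:
        for variable, score in response.items():
            if variable != "type":
                grouped[response["type"]][variable].append(score)
    return {
        robot_type: {var: mean(scores) for var, scores in variables.items()}
        for robot_type, variables in grouped.items()
    }


def best_type(means_by_type, variable):
    """Type with the highest mean on one variable (e.g. likeability)."""
    return max(means_by_type, key=lambda t: means_by_type[t][variable])


# Placeholder ratings on a hypothetical 1-7 scale, invented for illustration.
placeholder = [
    {"type": "I", "trust": 5, "likeability": 4, "anthropomorphism": 3},
    {"type": "II", "trust": 6, "likeability": 6, "anthropomorphism": 5},
    {"type": "II", "trust": 4, "likeability": 6, "anthropomorphism": 5},
    {"type": "III", "trust": 3, "likeability": 5, "anthropomorphism": 4},
]
means = mean_scores_by_type(placeholder)
print(means)
print(best_type(means, "likeability"))
```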

This study has several limitations that future research could address. Participants’ survey responses would need to be monitored closely for changes over the five years, since participants may grow used to their androids, and familiarity alone could change their perceptions. Participants may also be dissatisfied with their androids to different degrees depending on the Type, which could lead them to spend disproportionate amounts of time interacting with them and make the groups harder to compare.
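One way to monitor the familiarity effect is to fit a least-squares slope to each participant’s ratings across survey waves and compare the average slope within each Type. This sketch assumes ratings are numeric and that waves are equally spaced (for example, ten waves if surveys run twice a year for five years); the function names are hypothetical. A similar upward drift in every group would hint at familiarity rather than Type, and time spent interacting with the android could be added as a covariate in a fuller model.

```python
from collections import defaultdict
from statistics import mean


def ols_slope(waves, scores):
    """Ordinary least-squares slope of score on survey wave (change per wave)."""
    n = len(waves)
    if n != len(scores) or n < 2:
        raise ValueError("need at least two paired observations")
    mean_x = sum(waves) / n
    mean_y = sum(scores) / n
    sxx = sum((x - mean_x) ** 2 for x in waves)
    if sxx == 0:
        raise ValueError("waves must not all be identical")
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(waves, scores))
    return sxy / sxx


def mean_slope_by_type(participants):
    """Average per-participant slope within each Type.

    participants: list of dicts like {"type": "II", "scores": [5, 5, 6]},
    where scores[k] is the participant's rating at survey wave k.
    """
    slopes = defaultdict(list)
    for p in participants:
        waves = list(range(len(p["scores"])))
        slopes[p["type"]].append(ols_slope(waves, p["scores"]))
    return {t: mean(s) for t, s in slopes.items()}
```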

Future studies would benefit from narrowing the focus to one or two dependent variables. For example, we could measure specific relationships, such as that between anthropomorphism and trust building, or between human-like behaviour and the tasks assigned. Alternatively, we could examine correlations between a robot’s purpose and its anthropomorphism: Is it unsettling to have a human-like robot perform laborious tasks for a human? Would it be better to reserve laborious tasks for mechanical-looking robots and have human-like robots serve strictly as companions? Did users feel uncomfortable sending human-like androids to do tasks for them, as if they were human slaves? Data from the surveys could serve as preliminary research for these questions.

Humanizing robots also raises ethical dangers that we must take into consideration. As trust forms, we may gradually develop emotional and sentimental attachments to our androids and adopt them as our own, as suggested by Krach et al.’s Theory of Mind experiment. Further research is needed to define android tasks and roles in society and to determine the rights and regulations that should govern human-robot interaction.

References

Astington, Janet Wilde, and Margaret J. Edward. “The Development of Theory of Mind in Early Childhood.” Encyclopedia on Early Childhood Development, 2010.

Duffy, Brian R. “Anthropomorphism and the Social Robot.” Robotics and Autonomous Systems, vol. 42, no. 3-4, 2003, pp. 177–190., doi:10.1016/s0921-8890(02)00374-3.

Fancher, Hampton, and Michael Green. “Blade Runner 2049 (2017).” Screenplayed, 7 Aug. 2018, screenplayed.com/scriptlibrary/blade-runner-2049-2017.

Krach, Sö, et al. “Can Machines Think? Interaction and Perspective Taking with Robots Investigated via fMRI.” PLoS One, vol. 3, no. 7, 2008. ProQuest, accessed 10 Dec. 2019.

Lohse, M. “The Role of Expectations and Situations in Human-Robot Interaction.” New Frontiers in Human-Robot Interaction, vol. 2, 2011, pp. 35–56, doi:10.1075/ais.2.04loh.

Mirnig, Nicole, et al. “To Err Is Robot: How Humans Assess and Act Toward an Erroneous Social Robot.” Frontiers in Robotics and AI, vol. 4, 2017.

Mori, Masahiro, et al. “The Uncanny Valley [From the Field].” IEEE Robotics & Automation Magazine, vol. 19, no. 2, 2012, pp. 98–100., doi:10.1109/mra.2012.2192811.

Riek, Laurel, et al. “How Anthropomorphism Affects Empathy Toward Robots.” ACM, 2009, doi:10.1145/1514095.1514158.

“Sophia.” Hanson Robotics, www.hansonrobotics.com/sophia/.